Thanks for landing here.I am not going to explain economics here.I will explain step by step process about How to migrate database character set to AL32UTF8.

Oracle recommend to use DMU

tool to convert database character set.Tool can be downloaded using below link.

https://www.oracle.com/database/technologies/appdev/oracle-database-migration-assistant.html

Extract the zip file in a

folder where admin privilege is there.After extracting we can see

dmu32/dmu64.Based on the OS platform click the executable and it will ask for

JDK home.Mention the jdk home path and application will open.

Jdk 8 can be downloaded using

below link in case jdk 8 is not installed in your system.

Please follow below steps for

converting character set.

1.

Execute below

pre-requisite script as sysdba in the database so that it creates SYS.DBMS_DUMA_INTERNAL package

$ORACLE_HOME/rdbms/admin/prvtdumi.plb

2.

Compile invalid

objects by executing $ORACLE_HOME/rdbms/admin/utlrp.sql

3.

Purge

dba_recyclebin

4.

Take full

backup and create guaranteed restore point for recovery

5.

Gahther

database dictionary stats using below command in sqlplus

Exec

dbms_stats.gather_dictionary_stats;

6.

During

conversion phase lots of undo data will be generated and to avoid that we

should add datafile in undo tablespace proactively.Use below SQL script

which will create a job and monitor space in every 15 minutes and add datafile

if required.

***********************************************************************************

set serveroutput on;

create or replace procedure add_datafile as

sumb number;

summaxb number;

vSQL varchar2(300);

begin

for c1 in (select tablespace_name,sum(bytes)/(1024*1024*1024) Freespace from dba_free_space group by tablespace_name)

loop

--dbms_output.put_line(c1.tablespace_name||':'||c1.Freespace);

If c1.Freespace <1 then

dbms_output.put_line(c1.tablespace_name||':'||c1.Freespace);

select sum(bytes)/(1024*1024*1024),sum(maxbytes)/(1024*1024*1024) into sumb,summaxb from dba_data_files where tablespace_name=c1.tablespace_name;

if (sumb/summaxb)*100 >=85 then

dbms_output.put_line('ADDING DATAFILE- IN TABLESPACE '||c1.tablespace_name||'....');

vSQL :='ALTER TABLESPACE '||c1.tablespace_name||' ADD DATAFILE ''+DATA'' SIZE 10G AUTOEXTEND ON MAXSIZE 32767M';

execute immediate vSQL;

end if;

end if;

end loop;

exception

WHEN OTHERS THEN DBMS_OUTPUT.PUT_LINE(SQLERRM);

end;

/

BEGIN

SYS.DBMS_SCHEDULER.DROP_JOB

(job_name => 'SYS.ADD_DATA_FILE');

END;

/

BEGIN

SYS.DBMS_SCHEDULER.CREATE_JOB

(

job_name => 'SYS.ADD_DATA_FILE'

,start_date => TO_TIMESTAMP_TZ('2024/06/07 22:47:25.939518 America/Phoenix','yyyy/mm/dd hh24:mi:ss.ff tzr')

,repeat_interval => 'FREQ=MINUTELY;INTERVAL=15'

,end_date => NULL

,job_class => 'DEFAULT_JOB_CLASS'

,job_type => 'STORED_PROCEDURE'

,job_action => 'sys.add_datafile'

,comments => NULL

);

SYS.DBMS_SCHEDULER.SET_ATTRIBUTE

( name => 'SYS.ADD_DATA_FILE'

,attribute => 'RESTARTABLE'

,value => FALSE);

SYS.DBMS_SCHEDULER.SET_ATTRIBUTE

( name => 'SYS.ADD_DATA_FILE'

,attribute => 'LOGGING_LEVEL'

,value => SYS.DBMS_SCHEDULER.LOGGING_OFF);

SYS.DBMS_SCHEDULER.SET_ATTRIBUTE_NULL

( name => 'SYS.ADD_DATA_FILE'

,attribute => 'MAX_FAILURES');

SYS.DBMS_SCHEDULER.SET_ATTRIBUTE_NULL

( name => 'SYS.ADD_DATA_FILE'

,attribute => 'MAX_RUNS');

SYS.DBMS_SCHEDULER.SET_ATTRIBUTE

( name => 'SYS.ADD_DATA_FILE'

,attribute => 'STOP_ON_WINDOW_CLOSE'

,value => FALSE);

SYS.DBMS_SCHEDULER.SET_ATTRIBUTE

( name => 'SYS.ADD_DATA_FILE'

,attribute => 'JOB_PRIORITY'

,value => 3);

SYS.DBMS_SCHEDULER.SET_ATTRIBUTE_NULL

( name => 'SYS.ADD_DATA_FILE'

,attribute => 'SCHEDULE_LIMIT');

SYS.DBMS_SCHEDULER.SET_ATTRIBUTE

( name => 'SYS.ADD_DATA_FILE'

,attribute => 'AUTO_DROP'

,value => FALSE);

SYS.DBMS_SCHEDULER.SET_ATTRIBUTE

( name => 'SYS.ADD_DATA_FILE'

,attribute => 'RESTART_ON_RECOVERY'

,value => FALSE);

SYS.DBMS_SCHEDULER.SET_ATTRIBUTE

( name => 'SYS.ADD_DATA_FILE'

,attribute => 'RESTART_ON_FAILURE'

,value => FALSE);

SYS.DBMS_SCHEDULER.SET_ATTRIBUTE

( name => 'SYS.ADD_DATA_FILE'

,attribute => 'STORE_OUTPUT'

,value => TRUE);

SYS.DBMS_SCHEDULER.ENABLE

(name => 'SYS.ADD_DATA_FILE');

END;

/

**********************************************************************************

7. Create database connection in DMU tool providing host,port and service name

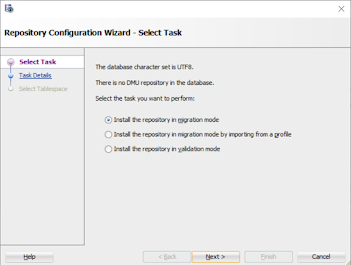

8.

Create migration repository

like below which will create some internal tables under system schema

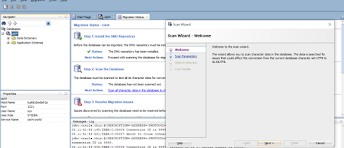

9.

In this step

we will scan the database to find issues before migration and those need to be

fixed before conversion.

This step will run as per 8 parallel thread as we defined

previously.In cist4 it took around 2 hrs of time.

10.

Next step is to review the scan report and

fix issues accordingly.Click on the link

View the scan

report

Followed below note to clear binary representation of

WRI$_ADV_OBJECTS

DMU Frags

WRI$_ADV_OBJECTS.ATTR5 as INVALID DATA (Doc ID 2634488.1)

1) Drop The Statistics Advisor Task

(AUTO_STATS_ADVISOR_TASK) :

DECLARE

v_tname VARCHAR2(32767);

BEGIN

v_tname := 'AUTO_STATS_ADVISOR_TASK';

DBMS_STATS.DROP_ADVISOR_TASK(v_tname);

END;

/

Once the task is dropped then all of its dependent task output data would be

deleted from the WRI$_ADV_OBJECTS table.

Now rerun the scan only for that table and we can see the

invalid representation error is now removed.

11.

Now run bulk cleansing from the object panel

and only for those 4 tables identified

In this step byte representation for

char/varchar column will be converted to char representation.

This process will create huge amount of UNDO

data.So it is advisable to allocate at least 300 GB of undo before this is

run.Otherwise cleansing might fail.

This is

done in restricted mode so no client connection can happen.

Check progress of bulk cleansing using below query

CONN / as sysdba

set PAGES 1000

select TARGET, TO_CHAR(START_TIME,'HH24:MI:SS - DD-MM-YY'), TIME_REMAINING,

SOFAR, TOTALWORK, SID, SERIAL#, OPNAME

from V$SESSION_LONGOPS

where SID in(select SID from V$SESSION where program = 'Database Migration

Assistant for Unicode')

and SOFAR < TOTALWORK

order by START_TIME

/

Once bulk cleansing is done we can see that some rows have

invalid binary representation as shown below.

Click on the number link under Invalid representation

column.

Click on the column heading and change characterset drop

down and see whether color changes to normal.

The most appropriate charset has been found as WE8MSWIN1252.

select owner,count(*) from dba_objects where

status='INVALID' group by owner

run utlrp.sql to compile invalid objects before conversion.

To fix this issue datafile added in system tablespace